I have been “greenlit” by #newdegreepress. They’ve reviewed my work so far on my book “The Fallacy of Laying Flat”. I’ve got the words, the format and the concept in control and on track. My first draft is due late February for publishing by the fall of #2022. Wish me luck! This is tough stuff!! I couldn’t have done it without the wisdom and support of #bookcreators and #georgetownuniversity. #publishing #authorlife #bigdata #decisionmaking #decisionintelligence #data4good #data

The physical and the mental . . . and the Information in Between

Chapter X of The Fallacy of Laying Flat

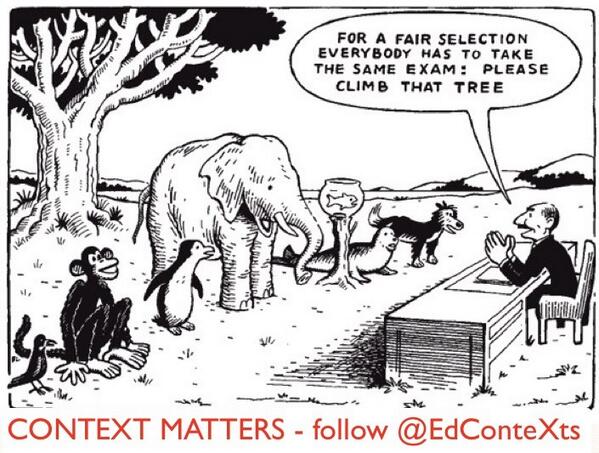

Before we delve into the art and science of how Big Data derives solutions, let’s look at the problem space itself. We err with small data decisions in a Big Data world. As Big Data capability evolves, it should be moving out of the flat and into structure. The following classifications evoke the thinking needed to stretch data sets into approaches that provide more productive and realistic decision capability. These ways and means are not being applied today as they could be, and they are not progressing as they should.

Problems have a spectrum of simplicity and entanglement. Figuring out world peace isn’t the same as what’s for dinner. Big Data hasn’t really changed the essence of some of those problems or the schema. Finding a plumber or combating a global pandemic is still a slate of options and contingencies, just with more information.

Big Data, though, provides so much information that it needs to be interpreted differently than the ways we have previously used small data. The data sets are not just exponentially larger; they are continuous and “always on”. They cost less to capture and process; they’re not the expensive discrete points of little data. Big Data is variety too – images and videos and texture beyond the characters and digits that were all humanity had available previously. The ability to capture and manipulate information is extraordinary. Machines are learning from us, and their intelligence is artificial – made by our hands from our minds.

Big Data doesn’t fit into Excel spreadsheets. How we interact with and interpret data is both divergent and nascent. We will talk more about what those differences are, but for now we’ll break down the problem space into categories of how they occupy mental space. Basically, how we understand a “problem” needs to grow from what standard concepts and practices are now.

The constraints of the physical still cannot be overcome without physical manifestation, but in a Big Data world, that interaction is so much more augmented with information. You still have to jump to catch a moving train, but catching it is much easier, more predictable, repeatable … rapid … with Big Data.

So let’s take a look at this Big Data rendition of examining problems – in four dimensions. The concept is to realize the “space” for decisions in an ever-increasing information world. As we use data more and more naturally, the problems adjust from single and two-dimensional thinking to more robust insight and action. For now perhaps the characters are overwhelming. We ride upon the churning waters of the ocean. The depths below contain mystery, and danger and possibility. We stay afloat by what we can see and feel and touch and by any and all means to survive or gain advantage. Soaring overhead is yet more potential – the ability to fly above it all.

The potential of Big Data is to observe and eventually coalesce the other domains that are tangent and perhaps critical to survival. It starts with one . . .

ONE – A Point in Time

The ONE dimension problem is a single moment. What to have for breakfast? Take or reject a job offer or a new relationship or a moral judgment. The classic scenario is “this or that” – the famous road diverging in a yellow wood. Robert Frost penned an iconic work of literary art in “The Road Not Taken” for choosing one path or another to symbolize the decisions we make in life. Standing at a fork, you can look down either of two roads and ponder what is or isn’t there. Most likely the traveler cannot return so the decision may be lamented afterwards, perhaps years or even a lifetime. Robert Frost knew FOMO well ahead of the Information Age.

A specific pivot point in time, it seems singularly lacking any width or depth of character or choice. Although we usually see the point in time as an either/or solution set, it has potential in an infinite set of choices in any direction. Given the opportunity to stop and derive solutions in place, most likely you can only see a couple of options.

I’d argue, though, that standing at that point, there are far more than two paths. The traveler could stand still or sit down and wait until something or someone came to assist or order or demonstrate. The traveler could decide not to take a road but instead tromp through the underbrush. Perhaps he or she could even turn around and go back the way they came.

A point in time has a solution in multiple – or perhaps infinite – directions. We often shave the choices down to two or three, which is pertinent for advancing through the dimensions of problems. In business decision etiquette, presenting more than three courses of action usually signals less than thorough research of the issue and a lack of ability to present concise thinking. Perhaps the broader scope of options is too much. Perhaps many of the choices are not even considered consciously or subconsciously because of undesirable effects. Embedded prejudices and experiences alter the frame of acceptable choices. We carve down the infinite set into tangible options from learned experience, which is often good but not always. We also eliminate what we don’t see.

Keep these limitations in mind as we step further into the TWO dimension problem.

TWO – The Plan

On November 9th, 1965, New York City and surrounding areas, home to over 30 million people across 80,000 square miles of civilization, underwent an electricity blackout of epic consequence. The city was without power for an unprecedented 13 hours. Heavy investigation into the circumstances traced it to a single point of failure – a safety relay that tripped as programmed. The relay opened the line, disrupting power, because of the heavy demand signal. Unfortunately the load was a “normal” albeit extensive load on the line due only to heavy usage from deep cold, and not the catastrophic power surge the relay was designed to prevent. A waterfall of effects followed as the excess load shot to other, re-directed lines, likewise shutting them down.

Ironically, this interconnected network was created to prevent blackouts, not to beget a proliferation of overload trips. Also of note were the exceptions – numerous “islands” were able to escape the blackout by having the right off/on switches. Staten Island and parts of Brooklyn evaded the effects when quick-thinking people disconnected them from the grid before the programming shut them down.

It’s complicated

The TWO dimension problem is complicated but not complex. Complicated is likened to a tangled set of corded earbuds that you pull out of the backpack. Always wadded into an artistic nest, it takes a minute or so to unravel, but then the cords are “laying flat.” The power loss came not from a lack of energy but from the distribution and safety systems in place. The solution was derived from unraveling the knot of the issue to an ah-ha moment. The plan works but it’s not perfect.

The TWO dimension problem is characterized and best recognized by its product – a PLAN. This is the lion’s share of today’s active problem solving. Two dimension thinking works best, and is used most, with complicated but not complex problems. If you can press down the frayed and curled edges of the PLAN, the map is “laying flat” and everyone can read the landscape and follow the directions. The map nails down points of interest and position and context and perhaps some texture. “The map” in your organization is a PowerPoint brief or spreadsheet or POAM (Plan of Action and Milestones) or a policy document or any of a plethora of business planning products.

One of many challenges with The Plan is its limitations for capturing the situation. Most often it appears dumbed down to make it actionable, but accordingly, it is ignorantly sourced.

Flattening the curve for COVID-19 was quintessential decision making within the plane. Positive cases and death counts tempered against healthcare resources of bedspace and ventilators were used to forecast the ability to handle ill patients. The data points told us something. The goal became keeping the numbers from exploding. The desired effect was keeping hospital resources from being overwhelmed. The public centered on those numbers, weighing success and failure from the daily counts.

The numbers were scalar. They didn’t address the differences within gradients of symptoms or the effect of measures put in place. They didn’t include testing practices or policy or, most pointedly, data sources for the efficacy of all of the above. The data most assuredly had considerable variation and instability (better known as “noise” in data talk); however, the numbers became ground truth – firm footing for taking next steps. The reality is those numbers can never explain the effects. The numbers were mistaken as proof of themselves instead of the reality of complexity, which was too difficult to maneuver or to use for guidance. (See “Will I die of COVID-19?”)

It’s a classic causality error. Does US spending on science, space and technology increase suicide occurrences?

So causality often uses numbers for quantifying things that are a bit fuzzy. But when is it causality or its misunderstood cousin – correlation? This graph represents a strong correlation between US spending on science and technology and an increase in suicide by strangulation. A strong correlation does not mean that financing more STEM leads to more suicides. That’s the difference between correlation and causality, a fine line we are able to appreciate given an obvious scenario.
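For readers who like to poke at the numbers, here is a minimal sketch of how two unrelated series can correlate strongly simply because both drift upward over time. The figures are made up for illustration (they are not the spending or suicide data behind the graph), and `pearson` is a hand-rolled helper, not anyone's official method.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

years = list(range(10))

# Hypothetical, made-up series: each one just trends upward with its own wobble.
spending = [18.0 + 1.1 * t + (0.4 if t % 2 else -0.4) for t in years]
incidents = [5400 + 350 * t + (120 if t % 3 else -120) for t in years]

# Correlation of the raw series: dominated by the shared upward trend.
r_levels = pearson(spending, incidents)

# Correlation of year-over-year changes: the shared trend is removed,
# so only the (unrelated) wobbles are being compared.
d_spending = [b - a for a, b in zip(spending, spending[1:])]
d_incidents = [b - a for a, b in zip(incidents, incidents[1:])]
r_changes = pearson(d_spending, d_incidents)

print(f"correlation of levels:  {r_levels:.2f}")
print(f"correlation of changes: {r_changes:.2f}")
```

The raw levels correlate almost perfectly, while the year-over-year changes barely correlate at all. Time is the hidden third variable doing the work.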

Looks like a dead giveaway. We can save lives and dollars by cutting resources to the favored STEM programs. This is an OBVIOUS example of a representation of data that shouldn’t drive any plan, only a casual remark of “interesting.” When data sets are not so obvious and life and death are on the line – as with COVID-19 – it is hopefully easy to see how important understanding data interpretation is to global pandemic response. As any smooth-talking salesman knows, the “truth” can be manipulated.

Lots of buts

This TWO dimension of decision space is where the law of averages and linear thinking nestle in and take over. Laying flat nurtures a confidence in comprehending the problem and the expected solution – that may or may not be reality. It inspires conviction in the linear logic of IF/THEN. Causality is the greatest desire and yet the most elusive truth. If bee stings or certain foods or situations cause an allergic reaction, then avoiding them prevents the pain. If you stick your finger in an electric socket, it’s going to shock you (or kill you, depending on the socket). Got it.

Then you slide toward more generalized IF/THENs. These have subtle rules embedded in their sequence. If you wear your seatbelt, you have a better chance of surviving an accident, but seatbelts don’t ensure survival. If you complete a college education, will you make more money? Most likely, but not absolutely, and the increase in college debt makes the uphill climb more monumental. And that logic doesn’t apply to Bill Gates and Steve Jobs (among a sometimes surprising list of stellar dropouts). Most laws and policy follow this gradient of IF/THEN. Whatever is generally good for one or for most is applied to support the greater good.

Drunk drivers come in all ages; however, raising the drinking age has had a lasting impact in reducing alcohol-related car fatalities. Turning 21 doesn’t bring a miraculous ah-ha moment of responsibility, but collectively, eighteen-year-olds decidedly show less of it.

Then there are plenty of insanely simple and wrong examples of causality. If a woman floats, she must be a witch; otherwise, she drowns. If everywhere you look is flat, then the world must be flat and sailing too far into the sunset risks falling off. We may be more “enlightened” now but we apply the same logic with today’s issues. COVID-19 included.

Normally we would

The law of averages is another deeply ingrained mindset within TWO dimension. We tend to lace over design with the bulge of the bell curve, regardless of its application to the situation. The previous examples are just a couple of ways that outliers foul the comfort of “average.”

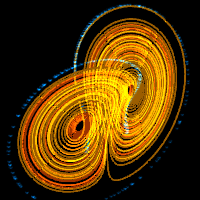

The natural setting, though, is often nonlinearity. Logarithmic, power, and exponential relationships prevail in many phenomena. These are “tipping point” behaviors and seemingly sudden or unexpected permutations – exactly what bites when you are thinking linearly.
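To make the bite concrete, here is a small sketch comparing a straight-line projection with a quantity that actually doubles each period. The doubling series and the `linear_forecast` helper are assumptions for illustration only, not a model of any real phenomenon.

```python
def linear_forecast(history, steps_ahead):
    """Project forward by repeating the most recent step change in a straight line."""
    slope = history[-1] - history[-2]
    return history[-1] + slope * steps_ahead

# Hypothetical quantity that doubles every period (periods 0 through 10).
actual = [2 ** t for t in range(11)]

# Pretend we have only observed periods 0 through 5.
history = actual[:6]

# A linear thinker extends the latest trend five periods ahead.
predicted = linear_forecast(history, steps_ahead=5)
shortfall = actual[10] - predicted

print(f"linear projection for period 10: {predicted}")
print(f"actual value at period 10:       {actual[10]}")
print(f"missed by:                       {shortfall}")
```

Even with a generous slope taken from the steepest part of what has been seen so far, the straight line lands at roughly a tenth of where the doubling series ends up.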

It’s very comfortable in the map but the real world will never become the representation we create. Wishful linear thinking and projected norms are not reality. Breaking this plane of thinking takes training, tools and systems that support the non-linear, chaotic world that is constantly surprising us with its bends and tricks.

Getting to the next dimension is critical to utilizing the data capabilities at hand as well as those evolving. Let’s go there now.

THREE – Out of the plane and into the fire

The THREE dimension solution set pulls out of the plane and tackles complexity. Whereas complicated problems can be untangled, complex problems do not lay flat. The variables continuously morph, pressing in or fading out of contention for individual or shared interest. With complexity, the set of earphones would change length or separate and reattach (or not) or switch color or stretch/compress; other people would be trying to fix them for their benefit and your detriment (or not). Complexity isn’t just a problem with too many moving parts; in complexity, players and events enter and exit the problem space with disparate probability sets of their own. The plan can never be laid on a table and “flattened”: think of traffic and nature and happiness. Or global pandemic.

The THREE dimension problem can be bounded, but the space is vastly larger and boundary control is a variable in itself. Suspending the system for evaluation does little to preclude the participation of influencing factors. Like slicing an integral to examine an instance, freezing traffic for a moment or an hour or any time increment does little to dilute its effect or to help understand the issues. Just as a road construction project creates a suite of ill effects to be mitigated, bringing economies to near all-stop has consequences too. We plan for such a stoppage with road construction, but it doesn’t prevent the need for repairs in the first place, nor the resulting pile-ups that will happen when it is underway.

The leverage in understanding traffic is within the flow – a vector quantity of magnitude and direction. Traffic doesn’t cease to exist because more information defines and tracks it. You don’t solve traffic so much as assuage its effects. Big Data learns to avoid or go around traffic if possible. That flow itself inserts influence on the problem set.

Boundary lines

To give a simple visualization of a complex problem, consider the systems engineering example of air conditioning a space. A box is created to make a comfortable space for some creature to inhabit (and possibly WFH). It has walls and ceiling and floor to contain the desired temperature, but it also needs a door – for food and water and mental relief and other assorted desirable bodily needs. How does air conditioning it work? How much energy is needed to sustain the effort? What state is necessary to survive or to thrive? Like the 3 little pigs, will it be made of straw or sticks or bricks? Who’s going to pay for it?

Will the walls be thick or thin? Whereas no insulation lets heat pass freely, insulating a room even to extremes still subjects the contents to the weather, because the room exists on an earth that has variable weather. Think about it. The more the problem is mitigated, the more its dependence increases, in this case – on the weather. Trying to “control” the weather only makes the problem more subject to it. The internal condition is influenced by the external conditions.

This case also visualizes the boundary passage aspect. Physically entering and leaving is necessary for utilizing the space; otherwise, why have the space? Air flow must pass the boundary as well for the heat exchange necessary to maintain the desirable temperature and utilization of the space. And there will be inefficiencies, waste, and slippage.

Note too that this example is “solvable” – with a given tolerance. I can set the control to 74 degrees. The “answer” is an easily established and visualized integer representing a desirable outcome. There are several feedback loops but making a decision, such as putting the thermostat at 74, establishes a policy that will not be instantaneous. The effect depends upon the system working as anticipated within a certain time period. That may or may not happen depending upon the physical operation capabilities of the system and the less measurable patience of the participants. Is there sufficient funding and engineering to design, build and then maintain that solution?
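The lag between setting “74” and living at 74 can be sketched with a toy simulation. Every number here (outside temperature, leak rate, cooling rate, deadband) is a made-up assumption, and `simulate_room` is a hypothetical helper, not an engineering model of any real HVAC system.

```python
def simulate_room(setpoint=74.0, outside=95.0, start=85.0, minutes=240,
                  leak=0.02, cooling=0.5, deadband=0.5):
    """Minute-by-minute room temperature under a simple on/off thermostat.

    leak:     fraction of the inside/outside gap that seeps in each minute
    cooling:  degrees removed per minute while the AC runs
    deadband: how far past the setpoint the room drifts before the AC toggles
    """
    temp = start
    ac_on = False
    history = []
    for _ in range(minutes):
        # The feedback loop: the thermostat reacts to the current temperature.
        if temp > setpoint + deadband:
            ac_on = True
        elif temp < setpoint - deadband:
            ac_on = False
        # The boundary is never sealed: outside heat leaks in regardless.
        temp += leak * (outside - temp)
        if ac_on:
            temp -= cooling
        history.append(temp)
    return history

history = simulate_room()

# How long until the room is within one degree of the setpoint?
minutes_to_comfort = next(i for i, t in enumerate(history) if abs(t - 74.0) <= 1.0)

print(f"minutes until within 1 degree of 74: {minutes_to_comfort}")
print(f"last hour ranged from {min(history[-60:]):.1f} to {max(history[-60:]):.1f}")
```

Under these assumptions the room takes most of an hour to get close, and then never sits at exactly 74. It hovers in a band around it, which is the “74-ish” reality of a policy meeting a physical system.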

Although a solution set of options attends the problem, the solution sets are multi-dimensioned. Each has time, cost, risk and opportunity in addition to width and depth of variables. The matrix likely has gray strata of pros and cons, at least some of which are subjective. If the desired state is comfortable living conditions, does “74 degrees Fahrenheit” maximize that accomplishment? Other variables are pressing. How great a variance is tolerable, detrimental, disastrous?

What It Takes

Solving a problem or preventing a disaster in three dimensions has several important components. First, there’s never a stasis. Entities as well as the influencers and structures are fluid, and not necessarily predictable or linear or normalized. The solution space has volume and does not remain within a plane; it is unlikely to become flat. If it does, the moment is measured but not enduring.

Studying three dimension problems is about utilizing vector quantities to appreciate the flows. The solution set is subjective measurement with associated tolerances, costs, and risks. “Acceptable” is both subjective and variable, although it can be measured and visualized.

Finally, the THREE dimension problem has to be comfortable with the gray and oh-so fuzzy in order to attain desirable effects. “74” is a number attached to a goal, but it’s not the end state. “74-ish” likely describes its acquiescence to variables observed or mitigated.

The next dimension pops out of the box.

FOUR – A Stitch in Time

The morning of September 8th, 1900, was mild and partly cloudy, with beach dwellers lingering to enjoy the surf of Galveston, Texas. The peaceful day was disrupted by a local weather forecaster on horseback riding the streets sounding the alarm of impending disaster. Whether they heeded his warning or not, the day was the first of several unloading all the hurricane forces feared. Most fateful was a tidal surge of over 15 feet, easily covering the entire island’s paltry 8 feet above sea level. Buildings simply floated off their foundations and crashed like bumper cars into other buildings. With a death toll of 6,000 to 10,000 lives, it remains the deadliest natural disaster in US history.

As with perhaps all disasters, there were signs and signals that were either ignored or denied or incorrectly interpreted or promulgated. Data points indicated potential hurricane capability, but sparsity of sources and lack of communication left them adrift to be tossed in the consuming waters. The local forecasters actually broke policy by announcing the impending hurricane disaster; policy dictated that warnings could only be broadcast with the national center’s blessing. The reparation is decades of research and reform and refinement developing some of the most sophisticated forecasting and modeling on the planet – hurricane tracking.

Predicting hurricanes is not like finding out today’s chance of precipitation. It requires a suite of forecasting tools. First, the atmosphere movement is captured via supercomputer dynamical modeling. Then historical models consume all the behaviors of past hurricanes to project active storm potential. Add to that trajectory models that focus solely on predicting the eye over land and ground. A slate of surge, wave and wind models each work to predict the variety of storm forces. Statistical-dynamic models encompass the influence of those two types. Finally, ensemble forecasts incorporate a suite of models.
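The ensemble idea can be sketched in a few lines: take what each model says, find the center, and treat the disagreement as a measure of uncertainty. The model names and numbers below are invented for illustration, and the plain unweighted average is a stand-in assumption; real forecast centers combine models in far more sophisticated ways.

```python
from statistics import mean, pstdev

# Hypothetical landfall predictions (km along a coastline) from made-up models.
forecasts = {
    "dynamical": 120.0,
    "historical": 95.0,
    "trajectory": 110.0,
    "statistical_dynamical": 105.0,
}

def ensemble(predictions):
    """Return the simple average of the predictions and their spread."""
    values = list(predictions.values())
    return mean(values), pstdev(values)

center, spread = ensemble(forecasts)

print(f"ensemble landfall estimate: {center:.1f} km")
print(f"disagreement among models:  {spread:.1f} km")
```

The spread is as informative as the center. When the models agree, the boundary is tight; when they scatter, the honest forecast is a wide cone rather than a point.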

Multiple approaches utilize different but sometimes overlapping data sets to tackle pieces to the puzzle. Only the most powerful tools can even attempt to consummate the results. For now too, trying to put all the factors together for a comprehensive picture is likely to dilute accuracy from the parts. We’re still not there yet.

Where the rigor meets the road

The result of using multiple models with competing and converging resources and reasons is the FOUR dimension problem space – building the plane as it flies – working all the issues of three dimensions in the reality of the march of time while handling injections and nonlinearity. The solution set for the four dimension problem is another integration up. Whereas the THREE dimension problem captures complexity, this dimension captures the lags and leaps of acceleration and unknowns.

Four dimensions is where the issue is most closely mapped to the territory. The models have fidelity and tangency and vibration. The result is never a “map” and surely, the body and edges never lay flat. The potency is vigilance, iteration, and constant tweaking of all the resources available. The boundary flickers and moves.

Modeling is rigor. Creating the model, especially in four dimension, exacts a deliberation in understanding the facets and facilities surrounding a problem as well as potential solutions. Only through this work can the intricacies and complexity be fathomed. The model itself becomes a talking point – an opportunity to share and critique. A model engages players and encourages interaction. The model extracts data from the Big Data world. It incorporates the “corporate knowledge” of its keepers, and it manipulates the interpretations, which are multiple and varied for most likely and most deadly. Taskings evolve from the model and return to add to its color and texture.

Get it

This is the essence of Big Data problem solving – humans leveraging knowledge through technology. It extends the Industrial Age to the Information Age and keeps going. This bridge from possible to actual is delicate and yet formidable.

It’s delicate because building the best modeled problem does not necessarily offer the most ideal solutions. Models are representations of the world – or the portion thereof that we want to control or correct or predict – but they are not reality. “Confusing the map for the territory” is a siren to which all humans are drawn. It is primal behavior. The model will never be reality though. Models are tools for working a problem. Recreating a problem set to exacting proportions results in . . . another world to manage. Plus, because humans are integral to the system itself, artificial intelligence development has shown that we add our own delusions through bias and conscious and subconscious interpretation.

That doesn’t mean it’s useless; it’s the algebra that needs to be done. And by algebra, I mean it’s the math not enough people can do. The FOUR dimension problem is the real world and it’s really, really messy. It’s full of noise, non-linearity, and sensitivity to initial conditions. The four dimension problem is where testing reality lies. We probably can’t solve problems in the four dimension with today’s science, technology and resources but this dimension is the evolution or perhaps quantum leap toward that capability. All problem solving isn’t about recreating the situation but manipulating it for desirable effects.

“You can’t navigate well in an interconnected, feedback-dominated world unless you take your eyes off short-term events and look for long-term behavior and structure; unless you are aware of false boundaries and bounded rationality; unless you take into account limiting factors, nonlinearities and delays. You are likely to mistreat, misdesign, or misread systems if you don’t respect their properties of resilience, self-organization, and hierarchy.”

It could be argued all problems exist in the FOUR dimension because time affects us all whether we recognize its influence or not. Not all problems though need the special effects of FOUR dimension. Some problems may recur or morph slightly over time; the risk and return of the solution though does not vary sufficiently to warrant FOUR dimension analysis.

This dimension also ventures to see the edge of chaos. Greater complexity systems study actually reveals that strategic intuition often pushes the lever the wrong direction from the desired outcome. Studying FOUR dimension problems and models is about trial and error and observation.

∑ – In the End

The answer is there is no answer. Not ONE like we want it to be. But we need to keep evolving with the Big Data world.

These four dimensions are hardly the best definition of problem solving. Many, many more academics can slice and dice and better explain the logistics and psychology of how and why to attack an issue, but this book will return to the fact that we are making small data decisions in a Big Data world.

Breaking down problem space into dimensions demonstrates at what level we are currently stuck as well as the potential of stepping up a dimension. The tools and systems we use now are based upon training and education with small data interpretation. Realizing the next dimension has greater insight and better prediction capability is a template for designing new tools and systems that do solve the more complicated and complex problems that can be tackled with Big Data.

It’s not just a cool factor of utilizing emerging technology. Big Data is necessary for solving the problems of a Big Data world. We had to stop the world to stop a pandemic. We can count positive cases and recoveries and deaths, but that doesn’t portend the effects of economic impact. These numbers shape the iceberg as seen from the surface. The impact of hunger, substance abuse, unemployment, depression, and less apparent “excess” death rates are under the water.

Using those numbers is valuable for composing algorithms and assessments for short and long term reverberations. Those numbers in combination with other Big Data sources can build scenarios for rebuilding and resurgence. What if this pandemic or the next one was much worse?

The Navigator’s Balls

So let’s consider the ancient mariners. Four thousand years before Christ, seafaring souls took to crossing waters while hugging the shoreline. A couple thousand years later, they used the stars and developed tools to create maps. (Yah, I totally believe it would take hundreds of years of staring at the stars to figure out how to navigate by them.) The two-dimension problem – here to there – was to facilitate commerce. They risked because the reward was financial. But predicting the weather over the horizon – that was the gods’ will.

Going back a few hundred years to the age of sail, mariners couldn’t see over the horizon, but they could use the very crude data of falling barometric pressure to appreciate weather not yet seen. They didn’t understand exactly why, but falling pressure meant prepare for the worst. Not an easy signal to find, but once discovered, they knew the consequences. We need to figure out Big Data barometry.

Big Data and its solutions are not going to look like what we have been doing or the type of results we have gotten. Today we have very sophisticated means to predict the weather – supercomputers and Big Data – but although the local forecast is usually close, it’s rarely 100%. As the forecast stretches further out into the future, it becomes less and less accurate. That’s the world of chaos and a whole additional elephant in the room that comes later.

So, there’s a lot of room for opportunity to grow, which is a nice way of saying we fall short in a lot of ways of solving problems. When we have yet to understand 95% of the world, solutions are our raison d’être. Life exists in understanding our world and marveling at the accomplishments of our creator – whomever you choose. Einstein is said to have quipped that if given an hour to solve a problem, he would spend 55 minutes studying the problem before 5 minutes of conjuring solutions.

The call to action with this book though is the data world is amassing information much more ominously than we are adapting to using its power. When we still use small data tools in a Big Data world, it’s bringing a knife to a gun fight.

Next we will explore what it takes to break the plane. These new decision spaces demand things we are not used to needing or accepting while creating a solution. Then we will dive into the triad of tools, training and systems that are needed to implement Big Data solving Big Problems.

Does Amazon Know What’s In Your Bank Account?

I watched a marketing webinar last night.

It was free. Typical of that genre, the point was to give away some information but sell you on a product or service that will give the rest of the picture. That seems a good trade off considering their time and effort put into the creation of the webinar. In return, learning from their experience made my time and effort watching it worthwhile. That’s a fair market return.

The presentation wasn’t interactive other than the pop-up order form at the end. So it was basically a recording; once produced, it can be repeated with only the additional broadcast cost. From what I can tell, it is played twice a day, most likely for a “limited time.” The product being sold is more of the same information. More extensive knowledge and examples are digitally boxed for download. Some level of customer service is implied.

For me, it potentially could be a good product.

The topic – Marketing – isn’t my strong suit, so I make an easy, albeit skeptical, target. I might have bought it … but the price was a bit more than what I would spend. I have a technique for shopping where if I like something enough to want to purchase it, I mentally calculate what it is worth – to me. The process includes thinking about my bank balance, the relative “need” for the product or service, how long it will last, will it pay me in return, alternative resources that may or may not do the same things for more/less time and/or money. The final consideration is Quality of Life – will I be happier/faster/slower/better for having purchased it. Whether a house or a pair of shoes, I scramble all that data to run it through the gonkulator that is my brain and contrive a cost I am willing to pay.

Then I look at the price tag.

Marketing & Economics 101

Ah price point – where supply and demand meet the wealth of nations. Wrigley made a fortune from penny sticks of gum. Today’s $.99 ebook downloaded a million times is close to a million dollars (depending more on its one time set up cost.) On the other end of the spectrum, the multi-million dollar yacht salesman needs 3 to hit the annual sales target. One puts him out of business and five will hold over for another year.

Adam Smith touted that without the tethers of government interference, private monopolies, lobbyists or “privileged” entities, the free market wields the perfect price where supply and demand meet. When banana crops fail, the price of those that make it to market goes up enough to meet whoever is willing to pay for more expensive bananas.

I’m sure he would have been spellbound by the much more elaborate threading of airline flight tickets. An incredibly (I don’t use that word lightly) sophisticated algorithm cultures those seat prices like a mother hen, sub-minute by sub-minute searching databases and optimizing just how much the seat should be priced. Would Mr Smith’s contemplations and calculations hold under the intense smoothing of the pricing integral? His theories have been smeared all over the earth with both creamy taste and reputed disgust.

He would’ve been further floored with the near-zero production costs of electronic products and services, although I’m sure he’d have much to say on it. These are the elements of economic evolution that test free market capability in a global economy.

Big Data Price Point

Of course, you probably wouldn’t be here reading this unless you’re more interested in Big Data than my spending habits. This is hardly business continuing education credit either.

But these “small” data examples are all market driven. The future – the Big Data Price Point – is that the price will shift according to your ability as much as your willingness to pay. That means the webinar product that I didn’t want to pay $497 for would have been further discounted to the $247 that would have made me uncomfortable but ready to use my credit card.

How is this possible? Supply and Demand. For one, it is a product with almost zero production cost, unlike the widget, which requires materials, labor, manufacturing, storage, and distribution. The digital warehouse doesn’t need a water supply or a janitor. The one stored copy (and backup) replicates instantly. There is a set-up cost; the knowledge must be conveyed creatively. People are needed to develop the product, which must be marketed and maintained. The elegance of the digital effort minimizes the cost per sale as the theoretical sales count grows infinitely. This is not a scenario Mr Smith would have anticipated.
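The arithmetic behind that shrinking cost can be sketched in a few lines. This is a toy model, not an accounting method: the function name `average_cost_per_sale` and the dollar figures are made up for illustration.

```python
def average_cost_per_sale(setup_cost, cost_per_copy, sales):
    """Spread a one-time setup cost over every sale, then add the per-copy cost."""
    if sales <= 0:
        raise ValueError("sales must be positive")
    return setup_cost / sales + cost_per_copy


# Hypothetical numbers: $50,000 to produce the webinar package,
# one cent to deliver each additional download.
for sales in (100, 10_000, 1_000_000):
    print(sales, round(average_cost_per_sale(50_000, 0.01, sales), 2))
```

With these assumed numbers the cost per sale falls from about $500 at a hundred sales to about six cents at a million, which is why the seller has so much room to move the price.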

As for demand, Big Data Price Point (PP) kicks in by knowing YOU along the same lines I mentioned that I use to make a purchase. Big Data PP would figure out if I have enough cash or credit for the sale. Big Data PP knows if I spend money on these types of products already. Big Data PP knows whether this fits my spending lifestyle or if it is a reach. Big Data PP determines if I can or cannot deduct it as a business expense. Even more so, Big Data PP calculates whether I need deductions at this point, against how many deductions I have already accrued in my fiscal year.

Big Data PP will charge me a different amount than the next sale to a different customer. An entity with a bigger bankroll may get charged more, or they may be offered a morphed package of sales for services I cannot afford: X downloads, unlimited downloads, additional webinars or custom services.
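As a rough sketch of the idea, suppose the seller already has an estimate of what a given buyer will pay. Producing that estimate is the hard Big Data part, and it is simply assumed here. The function name, the floor and the ceiling are all hypothetical; only the $247 and $497 figures come from the webinar example.

```python
def personalized_price(estimated_willingness, floor_price, ceiling_price):
    """Clamp the seller's estimate of what this buyer will pay between a floor and a ceiling."""
    if floor_price > ceiling_price:
        raise ValueError("floor_price must not exceed ceiling_price")
    return max(floor_price, min(estimated_willingness, ceiling_price))


# Hypothetical bounds: never sell below $97, never quote above $997.
print(personalized_price(247, 97, 997))    # a buyer like me
print(personalized_price(5_000, 97, 997))  # an entity with a bigger bankroll
```

The floor protects the seller's setup cost and the ceiling keeps the quote plausible. Everything between them moves with the buyer, not with the market.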

That’s not fair!

The same product is sold for different prices – because of how much I make? Well … yah. Want to write your Congressman or call your attorney? Think again.

Google (of course) is already doing it

And there are others. As early as 2000, Amazon was “price testing” multiple prices for the same DVD. They took some heat when consumers found out, so they dropped the practice (as well as the price). In 2005, dynamic pricing came into play again when a University of Pennsylvania study noticed prices differed according to browsing history – someone who had shopped competitors would get the more competitive price. In 2012, the Wall Street Journal reported how Orbitz’s offerings priced up to 30% higher for Mac users – Mac users have a higher average income.

Although the level of complexity may be surprising, we have become accustomed to cookies and their impact on our experience. Most don’t appreciate, though, the impact on the bottom line in the shopping cart. The Atlantic explains:

the immense data trail you leave behind whenever you place something in your online shopping cart or swipe your rewards card at a store register, top economists and data scientists capable of turning this information into useful price strategies, and what one tech economist calls “the ability to experiment on a scale that’s unparalleled in the history of economics.”

The price of the headphones Google recommends may depend on how budget-conscious your web history shows you to be, one study found. For shoppers, that means price—not the one offered to you right now, but the one offered to you 20 minutes from now, or the one offered to me, or to your neighbor—may become an increasingly unknowable thing.

https://www.theatlantic.com/magazine/archive/2017/05/how-online-shopping-makes-suckers-of-us-all/521448/

I’m going to need to see your zip code, ma’am

Physical or virtual, cost of living has always been tied to location. You can expect prices to be higher in New York City than in Greenbow, Alabama. I’ve known friends who won’t shop the grocery stores near their house because they are reputed to have higher prices. Then there’s the contra-pricing. I live in a resort area and to help out those of us who “suffer” through the throngs of vacationers to live the other nine months in peace, there is “locals only” pricing. That includes parking, some attractions and often secret pricing whispered over the phone ordering pizza or with the cashier for lattes.

Around the World

All the way back in the physical world, in Kenya most shopping is done on a very personal, one-on-one basis. Outside the upscale Village Market shopping mall in Nairobi, the Masai Market meets weekly in an open lot across the street. Advertised as an artisan fair of sorts, the goods are nevertheless likely to be found in a variety of souvenir shops anywhere in the country. It’s the experience though, another country’s version of selling what makes them unique.

I was excited. I was looking to find perhaps practical items to bring back home to share this lovely country’s culture. I was accompanied by a local. Although well-practiced at haggling from many countries around the world, I would need his native language and color to get a decent price. Otherwise, I would get the “mzungu” price, their understandable upcharge for an American, which I deserve. I didn’t expect to pay the same price as locals but I couldn’t afford the inflated price. The average monthly wage in Kenya is $76. Pricing is relative – to my location, my income, my nationality, my experience (traveler, savvy, job, education, common sense). I should pay more but not more than what the gonkulator tells me.

In the cloud

Where you live is already calculated in your costs and the virtual world does not provide escape any more than it does anonymity. My daughter realized a typical price difference phenomenon when she went to college. The prices she knew from shopping online at home increased noticeably on her laptop while in Washington DC. She texted a friend back home to shop simultaneously and compare. She was shocked by the results. When she tried to change the zip code for delivery purposes – hoping to trick the system – the price refused to budge. Amazon knew where she was.

Final Morph

So pricing in the virtual world has not gone into our personal pocketbooks yet (that we know of). The online market does use digital information such as browsing history and location to triangulate your willingness to pay a certain price. This is still within the Small Data genre of capability, utilizing mean and median sources.

Big Data Price Point though – and I believe it will arrive – knows YOUR personal bottom line. This is not a random variable calculated through the supply and demand of the local and not-so-local population. Big Data Price Point knows exactly what price to set for you from all your transaction history in stores and online, your taxes, your job, your household status, and much more.

Is that scary? Perhaps. But it is already very close to possible.

What would have happened with the housing crash of the late 2000s? Big Data Price Point would have offered houses at rates that individuals could afford. Would it have curbed the domino effect or accelerated it that much more? Perhaps Big Data Price Point would have sensed the cumulative errors the isolated banks were unknowingly committing and eventually unwilling to admit. It’s a twist again for Mr Smith’s legacy to wrap around.

The world out there is waiting to sell you the next Best Thing and, Big Data or not, marketing will continue to morph to find the magic price you are willing to pay. Big Data Price Point though will be oh-so intimately familiar with you and your money. In the end though, Big Data Price Point can only pose the question: will you buy?

The answer is still up to you.

8 Best application examples for blockchain in the US Navy (or your organization) – Part 3

Part 3

Expanding Operational: Blockchain Deployments for Impact

In Part 1, we explored the building blocks of blockchain – bitcoin and smart contracts. These top level basics of blockchain work quickly toward making more complex operations possible. Using step by step application, blockchain is already progressing right now in today’s industries.

In Part 2, we began moving from tactical to operational. The tactical utilization of bitcoin and smart contracts for stand-alone functions in test and evaluation morphed into the next level of operational with the isolated applications pulled into a third dimension, kinda like the third semester of calculus.

In this Part 3, we move further into operational with more complexity and subsequently a greater demand for coordination of resources. Using these novel concepts also further intertwines cultural change both internal and external to the organization. Instead of modifying or enhancing current business practices, blockchain replaces the process entirely.

Scary? Because replacing a current practice requires extensive planning and considerable disruption to the business process, the effort must exact a significant return on investment. So, let’s start with a strong and somewhat clean candidate for substituting a process entirely.

NO Sugar Coating

Blockchain can eliminate travel claims. Travel claims are a huge administrative burden to any organization and the Navy is no exception. The present digitized paper process, although cumbersome, has been necessary because travel claims historically have been riddled with fraud. A significant check and balance system has been necessary not only to counter the financial risk but also to hold together the integrity for faithful use of government funds.

The essence of blockchain is trust and the point of a travel claim has been verifying trust in a complicated (but not complex) process determining whether travel costs are true to the mission, in line with the command operations, and in adherence to multiple legal rules and guidelines.

By integrating smart contracts as the mission validation and order generation, a blockchain solution ensures the individual travel arrangements are only ticketed if they follow the smart contract requirements. A traveler can’t make a first class airline reservation to the Caribbean unless the orders include that provision. The traveler can’t accidentally book a rental car in Bangor, Maine when he or she should be in Bangor, Washington. They can’t book a hotel that exceeds the maximum lodging rate, again unless the orders permit such exceptions. Although the user-unfriendly Defense Travel System (DTS) flags such transgressions, it does so in a cryptic procedure that still requires verification in both the creation and execution of the process, adding administrative burden as well as risk – to the traveler, the authorizing official and subsequently to the organization.
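The rule checking described above can be sketched in ordinary Python. This is not smart contract code and not how DTS works; the class names, fields and dollar limit are all invented for the illustration. The point is only that the orders carry the rules and a booking is refused before it is ticketed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TravelOrders:
    destination: str
    max_lodging_rate: float          # dollars per night
    allowed_fare_classes: frozenset  # e.g. frozenset({"economy"})


@dataclass(frozen=True)
class Booking:
    kind: str                        # "air", "hotel" or "car"
    location: str
    nightly_rate: float = 0.0
    fare_class: str = "economy"


def violations(orders, booking):
    """Return the reasons a booking breaks the orders; an empty list means it may proceed."""
    problems = []
    if booking.location != orders.destination:
        problems.append("location is not on the orders")
    if booking.kind == "air" and booking.fare_class not in orders.allowed_fare_classes:
        problems.append("fare class is not authorized")
    if booking.kind == "hotel" and booking.nightly_rate > orders.max_lodging_rate:
        problems.append("lodging rate exceeds the maximum")
    return problems


# Hypothetical orders to Bangor, Washington with a $150 lodging cap.
orders = TravelOrders("Bangor, WA", 150.0, frozenset({"economy"}))
print(violations(orders, Booking(kind="car", location="Bangor, ME")))
print(violations(orders, Booking(kind="hotel", location="Bangor, WA", nightly_rate=140.0)))
```

An exception such as first class travel would be written into the orders themselves, here by adding "first" to the allowed fare classes, so the check and the authorization stay in one place.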

Blockchain ledgers reside in several distributed processing nodes that miners use. As such, a complete copy of the database exists on each node. This makes it highly difficult for anyone to misuse the technology for fraudulent purposes. A person will need to fool all the miners in the system to create a fraudulent entry.

https://gomedici.com/how-blockchain-will-revolutionize-invoice-backed-financing/
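To see why a fraudulent entry is hard to slip in, here is a minimal sketch of a hash-linked ledger copied to several nodes. It is a deliberate simplification: real blockchains use consensus protocols rather than the simple majority vote below, and every function name and payload here is made up for illustration. Only the standard library `hashlib` and `json` modules are used.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that comes before the first block


def block_hash(previous_hash, payload):
    """Hash a block's payload together with the hash of the block before it."""
    data = json.dumps({"prev": previous_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(data.encode("utf-8")).hexdigest()


def build_chain(payloads):
    """Turn payloads into a list of (payload, hash) blocks, each linked to the one before."""
    chain, previous = [], GENESIS
    for payload in payloads:
        previous = block_hash(previous, payload)
        chain.append((payload, previous))
    return chain


def is_intact(chain):
    """Recompute every hash; an edited payload no longer matches its stored hash."""
    previous = GENESIS
    for payload, stored in chain:
        if block_hash(previous, payload) != stored:
            return False
        previous = stored
    return True


def majority_head(node_copies):
    """Return the final hash that most node copies agree on."""
    heads = [copy[-1][1] for copy in node_copies]
    return max(set(heads), key=heads.count)


honest = build_chain([{"hotel": 140}, {"rental_car": 45}])
forged = build_chain([{"hotel": 940}, {"rental_car": 45}])  # one node rewrites history
print(is_intact(honest), majority_head([honest, honest, forged]) == honest[-1][1])
```

Editing a payload in place breaks that copy's own hashes, and rebuilding the whole chain produces a final hash the other nodes do not share. Either way the odd copy stands out.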

Furthermore, changing travel arrangements, even to save the overall cost of the mission, requires significant staffing of command personnel as well as a 24/7 help desk. Resolving those changes works well sometimes and not other times, making the process clumsy and flex-deterrent. Travelers avoid modifications because the process often doesn’t cooperate and changes cause ambiguities in cost accountability, shifting the risk to the traveler. It’s safer for the traveler, but more expensive for the government, to stick to the original itinerary.

Execution

With smart contracts, the travel payment and former claim process actually execute simultaneously in real time as travel occurs. There is no back-side report, which is today’s travel claim. When the traveler boards a plane, the transaction is verified and paid. When the traveler checks into the hotel, the night’s stay is paid, and the next, and the next until the traveler leaves. The metro ticket or Uber ride is verified – and paid – as it happens. Per diem clocks in at midnight every day. Per diem might morph into per minuta (prima/secunda) more relevantly. Each transaction is a block – communicated and verified as it happens.

Accountability

The immediate exchange is possible because accountability is pervasive and simultaneous. The command, the travel authority, and the financial auditing are all part of the distributed network. All receive identical copies that cannot be altered or corrupted as the traveler progresses. The smart contracts are created to only execute with valid transactions. By definition, all costs are incurred and audited in situ – as they happen. Travel claims are not necessary because the transaction cannot happen without valid quid pro quo. Get it?

Smart contracts also provide detailed record keeping on a Big Data level. Because the transactions are distributed to several sources, each monitors and flags transactions that are out of context. This is more efficient than verifying each travel claim: individual anomalies are not only detected and resolved more readily, but the anomaly data also provides feedback to the system as a whole.

http://dataconomy.com/2018/01/blockchain-will-kill-invoice/

Pay Off

The Defense Travel System (DTS) is basically a digitized paper process, enhanced with the ability to flag certain items and complete select transactions such as airline tickets, hotel reservations and rental cars (most of the time). A blockchain smart contract is a true digital process inherently built with trust to facilitate transactions without undue verification. Smart contracts would understand cost trade-offs without manning redundant staffs.

APPLICATION 5: substitute the travel claim process with travel order smart contracts

Replacing a digitized paper process with a digital system is a foundation for operational blockchain applications. So let’s pick another example.

Pass the Test

Physical fitness is and always will be a personal measurement. No one can be your fitness for you; it’s a bank account only you create through deposits and withdrawals. However, it no longer needs to be a command function. Like most standardized tests, the Navy’s Physical Fitness Assessment (PFA) doesn’t measure fitness; it measures the ability to take the test. Blockchain can eliminate the administrative burden of physical fitness assessments currently required of each command by replacing them with continuous monitoring and smart contracts.

To understand the solution, let’s first look into the natural stasis of physical fitness testing within the Navy lens. Personal physical fitness – and the test thereof – falls into three categories.

Branches

The first group – hopefully the largest within the Navy – already routinely exercises without monitoring or testing, often far exceeding any written instructions. Whether they hit the gym three times a week or hit the trail every day or train for triathlons or all of the above, they just do it. Working out doesn’t have to make sense or be convenient, these folks know it feels good and it is good. They don’t need an instruction or direction, let alone a minimum test.

The second group does not have any workout regimen, yet they appear twice a year to pass the current fitness testing at whatever competency level. This “3 mile club” demonstrates that testing does not measure fitness so much as underline the administrative burden it takes to execute the command physical fitness assessment. They naturally pass the minimum standard and do not need training or workouts. They do not need further monitoring or assistance unless they begin missing the mark.

The third group does not make the minimum requirements. Falling somewhere in the range of how much or little they work out, these folks could go in either direction. Not everyone has the natural ability to pass like the second group, but the patterns of the first group’s regimen can be learned. Instead of spending the time testing the whole, the attention can be given to supporting the individuals who need help the most, potentially by learning from those exceeding the bar.

The Minimum

One of the challenges to having standards testing is the minimum requirement itself. The bar is set ostensibly to ensure that, during perceived arduous duty, Navy personnel have the physical capability to thrive in combat. Historically, the need for physical capability has fluctuated greatly. Even within the lens of today’s standards, the Navy is bounded by the overall physical fitness of the recruiting population, which is famously becoming less fit and more overweight.

Within the Navy, too, the physical demands of a job vary from community to community. The pilot flying high-performance aircraft requires greater physical capability than the human resources officer ensuring the mission continues on the ground. The combat corpsman needs to be in better shape than the submariner.

The Rest of the Story

At the end of the day, the bar is set not so much to ensure physical fitness as to meet the variety of goals required for the Navy’s overall mission. End strength – the overall numbers in uniform – and Fit & Fill – the right skill sets sitting in the appropriate job – are highly challenging tasks even without any friction. PFA testing has often been used for force shaping – the tool to manage end strength and fit & fill. Thus the bar rises during times of economic downturn and reduced budgets in order to pare down numbers. The bar settles downward to retain Sailors in less austere times.

The Navy will grant a clean slate to nearly 50,000 sailors with fitness failures in their records, part of new shakeup for fleet-wide fitness rules announced Thursday.

https://www.navytimes.com/news/your-navy/2017/12/21/navy-grants-fitness-amnesty-to-48000-sailors-who-failed-test/

So what replaces “testing”?

Blockchain validates a transaction and for the PFA, a smart contract fulfills through individual accomplishment. That data aggregates into a Navy-wide physical fitness measurement. Whereas a standardized test measures the ability to pass a test at a given level, flipping that idea means recording actual fitness participation and determining fitness from the data. The smart contract fulfills the testing requirement, but the Big Data capture is actually the value that is important to understand. One more time – knowing how fit the Sailors are is far more valuable than passing any test. Time and policy have proven the test is variable. If followed effectively, this methodology actually relieves the need for a test.

Pic MCS Christopher Pratt/Navy

What Does it Look Like?

Implementation would start with a morph. The first group is the model. Their individual workouts fulfill the requirements of physical fitness for the organization day after day. For this group, the smart contract obligations are integrally and continuously verified. For the second group, the 3 mile club makes a trip to the gym for specific measurements at a periodicity to fulfill the obligation, like an inoculation that has to be fulfilled. Finally, the third group gets flagged immediately, which provides the quality attention for establishing the routines of the first group.

Eventually, the fitness assessment would be seamless, ubiquitous, and transparent. Like your phone knows where you are, the Navy would know fitness as a whole and as individuals. The notion of twice a year testing is bound by the discrete, paper limitations in the box of analog thinking. Today’s Sailors are not draftees. The all-volunteer force is amassing millennials, born into a connected, continuous world. Making a digital process – not digitizing the current one – is what serves them as well as the Navy.

APPLICATION 6: substitute the Command Physical Fitness Assessment test with personal continuous fitness smart contracts

Next up: Part 4,

8 Best application examples for blockchain in the US Navy (or your organization) – Part 2

Part 2

Moving from Tactical to Operational: Contained Blockchain Deployments

In Part 1, we explored the building blocks of blockchain – bitcoin and smart contracts. These top level basics of blockchain work quickly toward more complex operations. Step by step, blockchain applications are progressing right now in today’s industries. It’s as easy as 1-2-3D.

Going 3D

As the tactical is utilizing bitcoin and smart contracts to execute stand-alone functions for test and evaluation, the next step is to pull the isolated applications into a third dimension, kinda like the third semester of calculus. For the US Navy, that opportunity could be implementation with Additive Manufacturing. Here’s why.

Nascent Technology.

Although 3D Printing was developed in the 1980s, the feasibility of metalworking for parts such as nuts and bolts didn’t mature until the 2010s. Thus the capability being explored is founded in practice and yet still molding (har har) into full application – in regard to the grand scale of spare parts that the US Navy demands. Because of the newness, the technology and its deployment are still pliable.

Bounded Issue.

Spare parts are a significant contribution and yet a contained subset of Navy needs for its mission. Although the traditional supply chain may be cumbersome and expensive, it works and provides a stable backup for production.

Appropriate Application.

3D printing is a developing technology itself that is fundamentally digital. Since additive manufacturing begins digitally and executes digitally, utilizing blockchain enhances the process by simultaneously replicating the team effort to the distributed ledger. Blockchain is adding to the system, not replacing it.

In the summer of 2017, the Navy actually announced plans to use blockchain for additive manufacturing. Numerous US government organizations such as the Centers for Disease Control, Department of Homeland Security, Department of State, and Office of Personnel Management are also investigating the possibilities of blockchain in their missions.

Coming Together

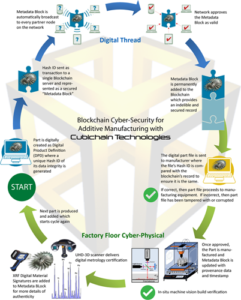

This graphic illustrates the interconnected capabilities of blockchain while still keeping the basic concepts in common buckets.

https://www.devteam.space/blog/the-rise-and-rise-of-blockchain-as-a-service/

Additive manufacturing itself is seen as a means to reduce costs and enhance the production cycle in both time and quality. With smart contracts, the additional benefit is simultaneous, irreversible duplication of the production cycle as it lives. All members of the process – start to finish – are active and aware of the function transactions. These members are the selected distributed network of nodes that are integral to the process: creators, engineers, users, legal, accounting and the pertinent chain of command. Because blockchain is founded on sequential iteration, every version of the product – success, failure & in-between – is captured for the network. So mistakes are captured for remediation as well as for historical reference, functionally or administratively.

While the current means for supply aren’t going away, blockchain provides opportunity to further expand the benefits of smart contracts while minimizing the risk.

APPLICATION 3: expand testing smart contracts for consumption through the Additive Manufacturing deployment

Identity Management

“What’s in a name? That which we call a rose by any other name would smell as sweet.” – Shakespeare

As we move further into operational, identity management is another subset that is already in practice in industry. In a world of biomarkers and data exhaust, an individual can be identified by so much more than a name or a number. Using thumbprints or retinal scans never became as common as future-casts predicted, and even identification cards are losing their power. For example, when was the last time someone checked your ID card when you paid with a credit card? The card actually says “not valid until signed,” but is the signature on the back verification for the vendor or opportunity for a forger? A new practice also allows for no signature on the receipt for purchases under $50, which indicates a proven algorithm of risk and return for chasing fraud according to a threshold. Credit agencies have many more mitigation strategies that obviate the need to identify the user.

Today

Blockchain Identity Management (IDM) has not been accepted as common practice yet. At this stage, developers are still exploring the technology and, with the significant threat of information breaches, it is understandable that financial institutions are leading the way. The potential though is being carried in real currency. Blockchain IDM startup Selfkey began its Initial Coin Offering (ICO) with the intent of selling a limited supply through the end of the month. They closed sales in 11 minutes when they reached over $21 million in investment. Last June, Civic raised $33 million through ICO, again closing the sales well ahead of schedule.

Another strong demand signal is creating a global identity standard, establishing a common practice for personal identification worldwide regardless of country of origin. Several startups are utilizing bitcoin, blockchain, open and secure networks to meet the demand signal of digital containment.

The blockchain holds no PII data, only verification signatures that cannot be reverse engineered.

https://shocard.com/shobadge/

Futurecast

Since blockchain is an ongoing accumulation of data blocks in a chain, think of a person’s identification that way. The person doesn’t change so much as their circumstances. Blockchain is used for identifying diamonds and high-end wines as they travel from point of origin to sales floor. The item itself doesn’t change; its journey defines its identity. Its value is certified by the steps it takes, which are captured as blocks in a chain. Only when the chain is broken or corrupted does the item come under scrutiny, and then the sequence of events can be evaluated for interpretation and judgment.

The credit card company knows it is you more or less when you charge and the down side risk is minimal compared to the cost of deeper certainty. Individuals with security clearances by and large keep to the trust endowed. With blockchain identification, the organization and all the individuals who are trustworthy benefit when a single trustee breaking the chain can be identified immediately.

Blockchain Identity Management is a good operational application because it does not replace existing trust systems in place. The technology can be “bolted on” to a test unit for determining best practices. The bounded process can then be readily implemented and replicated. Identity management is ideal for blockchain implementation because the ongoing issues of personal identification risk are rising. A light bulb is needed because better candles are not sufficient to hinder hacking.

APPLICATION 4: develop identification blockchains for access to classified information

UP NEXT: 8 Blockchain Applications for the US Navy (or your organization): PART 3, Expanding Operational: Blockchain Deployments for Impact

Show Your Tatas! Big Data on Big Boobs, Bras & Breast Cancer

Big Data Takes on Big (Boob) Problems

Big Data application comes in many shapes and sizes and the Big Data Bra is Big Data wrapped in the silk and latex of lingerie.

It starts with a simple problem.

We have come a long way baby from corsets and elastic superstructures. The width and breadth of materials and configurations is overwhelming today and surpassed only by the running shoe industry in the utilitarian fashion spectrum.

Yet bras still don’t fit well. For those who “need” to wear them, they pinch and sag and ride up your back. You push and pull to pack ‘em in and in return they pooch and groan to comply. The straps spend a lifetime slowly paving a permanent groove over the shoulder. At the end of the day, within the privacy of the house, you pop the straining band – freedom!

Open the bra drawer on the dresser and the volume and variety (2 Vs) are apparent. There’s a bra for every occasion: bras for sweaters or for t-shirts or racer back or the all-day-at-work ones. The padded, the push-ups and the breast minimizers. Going to the gym or hitting the trail is a whole different world altogether, with sports bras equipped in extraordinary configurations to look good somehow while keeping everything from exploding.

Then there’s a whole undergarment wardrobe established just for after dark: the trying-to-be-sexy bra, the one bra that goes with THAT dress bra (the dress is sssoooo cute but only one bra works with it), and the worst ever compromise – the strapless bra. These all fall in the genre of i-can-last-x-hours at the restaurant/dinner party/gala event. There are pads and patches to replace bras and yes, in a pinch, the old school – duct tape.

One Size Does Not Fit All

And neither does band width and cup. Travel to any department store lingerie section and you’ll find a world of bra selection. Unless you don’t really need one, it’s not about size and color. You want what looks and feels great. Although you want something pretty, it’s about fit and form. This is a perfect example of lots of selection but not true fit.

So you spend as long as you can stand in the lingerie section. Like the 20 year old dating strategy, you try on bra after bra to find the perfect match, or at least one that will work for a while. It’s a simple affair then but as time drags on (downward I mean), it gets harder to meet one you like. You know it may fit in the store and “hold much promise” but one wash later and it is on the path unfortunately to break down. Keeping it clean means delicate washes and special care but it’s going to unravel on you sooner than you like.

So how about flipping the market from push to pull? A single customer doesn’t need all that selection. She needs The One(s) that fit her. Wouldn’t the manufacturer and retailer want to sell her The One(s) without holding the inventory of racks of choices and piles of discards? Instead of creating a huge variety of bras that consumers can squeeze into, how about starting with the customer and tailoring the selection to her exact dimensions?

“3D printing is creating everything from cars to human tissue. Facial recognition software commonly creates 3D renderings from its algorithms. Facial recognition technology captures data from the contours of a person’s face and then computes the ratios between each feature. Since no face is exactly the same, each person would generate a unique data set based on the shape, size, and location of their features. This mathematical output can be compared and sorted for matching and identification purposes or to track a person’s identity.”
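The “ratios between each feature” idea in that quote can be illustrated with a toy calculation. This is not how any real recognition product works; the landmark names and coordinates are invented. It only shows why ratios are useful: they stay the same when the picture is taken closer or farther away. It needs Python 3.8 or later for `math.dist`.

```python
from itertools import combinations
from math import dist


def feature_ratios(landmarks):
    """Divide every pairwise landmark distance by the distance of the first pair.

    Assumes at least two landmarks and that the first pair (in name order)
    is not the same point.
    """
    names = sorted(landmarks)
    distances = {
        (a, b): dist(landmarks[a], landmarks[b]) for a, b in combinations(names, 2)
    }
    baseline = next(iter(distances.values()))
    return {pair: d / baseline for pair, d in distances.items()}


# Made-up pixel coordinates for four landmarks, then the same shape at twice the size.
small = {"left_eye": (30, 40), "right_eye": (70, 40), "nose": (50, 60), "chin": (50, 100)}
large = {name: (2 * x, 2 * y) for name, (x, y) in small.items()}

same = all(
    abs(feature_ratios(small)[pair] - feature_ratios(large)[pair]) < 1e-9
    for pair in feature_ratios(small)
)
print(same)
```

The same scale-free signature is what a made-to-measure garment would need from a photo: proportions first, with one known measurement to set the actual size.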

In a world of 3D printing and people recognition software, you should be able to take some pics and make a bra – 10 bras – that specifically fit the pic.

That’s where Big Data comes in.

My Big Data Bra begins with uploading personal pictures to the Volume, Variety and Velocity of data from Victoria’s Secret or Bali or Warner or Free People or Maidenform or Wacoal. They design and manufacture a bra – a suite of bras if they’re good – and send them to me, not the store. They send me sales suggestions on replacements. I send them back my battle-worn discards, which they analyze by computer for fatigue to find the next best thing – for me – using the millions of data points around me. I can send new pictures to show how I’m adapting to life’s gravity effect, or the cosmetic repair thereof, and they use the feedback for new bras. The cycle continues.

Nice Tata Thought, but How Does This Solve Big Problems with Big Data?

This consumerism creates a huge non-medical database of biometrics. These geo-tagged, anonymized sources are deposited in data lakes with other data associations. It creates a new lens for researching breast cancer. Several breast cancer studies have already linked larger breasts to increased risk of cancer but the correlations are only suggestions.

More data would create more patterns and new opportunities. In one capability, the information is utilized by huge research organizations, using larger resources in order to view the Big(ger) picture.

Another angle is that third-party specialists create ways to analyze a personal signature against other Big Data sources – location, transactional, lifestyle, etc. – to assemble breast cancer risk factors unique to that person. Third-party providers could also suggest products and services to enhance breast life choices. There could be an app for using the pictures to tap into other Big Data resources for your overall health.

Further outside the box

Now blow this concept out of the bra. Whole body scans could do the same for total body health by incorporating pictures into your Big Data Medical Record. Providers use them as part of a holistic view of your health. App analysis helps assess your strengths and weaknesses. Big Data derives suggestions for effective and very personal results.

We live in a global collective. Now is only the beginning of its documentation. Big Data is the ability to utilize those billions of millions of data points. Let’s use Big Data to solve Big Problems.

Come and get it!!

My pre-launch book sale campaign is underway!! Here’s a taste of what’s up for sale!

What’s Your Problem

Solving world hunger or fending off COVID-19 is a different problem space than what’s for dinner. It’s pretty easy to follow that distinction; however, it’s important now because we do have much more information and many more tools for tackling the former. Twenty years ago, solving world hunger seemed to be a matter of getting the best minds together with a sufficient checkbook. The matrix of issues and identities though is much more complicated than just ideas and the finances to back them. Although it does take funds to make things happen, the funds don’t solve the problem by themselves.

Better understanding the dimensions of a problem determines the solution space as well as the tools and methods for addressing its aspects and effects – because it may or may not be solvable.

“We are doomed to choose” – Isaiah Berlin

The world and its problems are becoming more complex.

The world and its problems are becoming much more intricately entwined.

We all need to make better data decisions because the world we live in is consequently subject to greater reactions and effects.

We gotta figure this out. We can’t just keep pushing more and more words and numbers into documents without providing a way to comprehend them better.

I may vomit. This part of writing a book is the hardest – actually trying to get people to buy it. For those who’ve been following my book posts on LinkedIn and Facebook, this is the pre-sale campaign I’ve been hinting at for the past few months. Now that I’m in the revision phases of my manuscript and have New Degree Press as my publisher, I now get to worry about funding the actual publication process!

If you’d like to help me on my publishing journey, please pre-order a signed copy by clicking here!

#amazonknowswhatsinyourwallet

#privacy #personalization

Wouldn’t it be nice if you were just thinking of something you needed to get . . . and it came right to you? I imagine that’s the futuristic goal of #amazon. Haven’t they made our lives sssoooo much more convenient? On the one hand, it’s so easy to “shop around” at home. It’s so nice when a solution isn’t that hard to find. Right? But it’s a fine line to cross into where you’re not driving the train for what you need (or think you need). Who is looking into your #personalinformation?

#theguardian reported last week on Amazon in “The Data Game: what Amazon knows about you and how to stop it.” Some things you may know already, but others maybe not. Their source, though, was a #wired magazine article, “Amazon’s Dark Secret: It Has Failed to Protect Your Data” (link in second comment), published in November 2021 – and it packs a punch:

“According to internal documents reviewed by Reveal from the Center for Investigative Reporting and WIRED, Amazon’s vast empire of customer data—its metastasizing record of what you search for, what you buy, what shows you watch, what pills you take, what you say to Alexa, and who’s at your front door—had become so sprawling, fragmented, and promiscuously shared within the company that the security division couldn’t even map all of it, much less adequately defend its borders.

In the name of speedy customer service, unbridled growth, and rapid-fire “invention on behalf of customers”—in the name of delighting you—Amazon had given broad swathes of its global workforce extraordinary latitude to tap into customer data at will. It was, as former Amazon chief information security officer Gary Gagnon calls it, a “free-for-all” of internal access to customer information. And as information security leaders warned, that free-for-all left the company wide open to “internal threat actors” while simultaneously making it inordinately difficult to track where all of Amazon’s data was flowing.”

Both articles are well worth your time to understand the power and vulnerability of #personalinformation. This is my take on #bigdata pricing and what the future of hyper-niche looks like. It’s in my upcoming book #thefallacyoflayingflat.

“The future – the Big Data pricing – is that the price will shift according to your ability as much as willingness to pay. That means the (online) webinar product that I didn’t want to pay $497 for would have been further discounted to the $247 that would have made me uncomfortable but ready to use my credit card.

How is this possible? For one, it is a product (online webinar) with almost zero production cost. Unlike the widget which requires materials, labor, manufacturing, storage, and distribution, the digital warehouse doesn’t need a water supply or a janitor. The one stored copy (and backup) replicates instantly.

The next component is YOU. Big Data knows YOU along the same lines I mentioned that I use to make a purchase. Big Data figures out if I have enough cash or credit for the sale. Big Data knows if I spend money on these types of products already. Big Data knows whether this fits my spending lifestyle or if it is a reach. Big Data tells me I can or cannot deduct it as a business expense. Even more so, Big Data knows whether I need deductions at this point, against how many deductions I have already accrued in my fiscal year.

The more uncomfortable aspect? Big Data Pricing will charge me a different amount than the next sale to a different customer. An entity with a bigger bankroll may get charged more, or they may be offered a morphed package of sales for services I cannot afford: X downloads, unlimited downloads, additional webinars or custom services. In the lesser bankrolls, perhaps we will get a deeper discount or an extended opportunity to buy or a few more promptings in our email inbox.

“So pricing in the virtual world has not gone into our personal pocketbooks yet (that we know of). The online market does use digital information such as browsing history and location to triangulate your willingness to pay a certain price. This is still within the Small Data genre of capability, utilizing mean and median sources. Big Data Pricing though – and I believe it will come – knows YOUR personal bottom line. This is not a random variable calculated through local and not-so-local population supply and demand. Big Data Pricing knows exactly what price to set for you from all your transaction history in stores and online, your taxes, your job, your household status, and much more.

Is that scary? Perhaps. But it is already very close to possible.

The world out there is waiting to sell you the next Best Thing and, Big Data or not, marketing will continue to morph to find the magic price you are willing to pay. Big Data Pricing though will be oh-so intimately familiar with you and your money. In the end though, Big Data Pricing can only pose the question: will you buy?

The answer is still up to you.

Life before Facebook Newsfeed

Almost 15 years ago, finding out what your friends were up to meant going to individual FB pages to check on them. Click. Read. Click. Read. In 2006, one of Zuckerberg’s famous notebook sketches came to life – news feed. Hence the scroll was born and we’ve been scrolling ever since. And Mark Zuckerberg has been answering for its effects time and again.

Now the norm, news feed’s arrival was wildly unpopular. Actually “This SUCKS” was the collective comment; complaints of creepy and stalker-like were the reaction. Ten percent of FB users joined groups (ironically on Facebook) demanding its removal. FB itself simmered internally with controversy over keeping it versus trashing it. The solution came as a compromise: opt-in privacy settings that allow control over who sees the news feed. Makes sense . . . because that’s what we’ve lived for years now. Scroll. Scroll. Scroll.

This isn’t a recall lesson for FB devops; this is for us to remember. 1) We consumers don’t like change for the most part. Unless there’s a huge visible upside, we fight the upsets to our expectations of how life should go. 2) Great ideas – even game changers – need tweaking. Don’t be afraid or too proud to see the faults in your work. Take the edits; listen to the critics. There are going to be unintended consequences to any magical kingdom.

Mark Zuckerberg may always have the ego to conquer the world with his creation. But he’s also admitted to failure. He has also adapted to the responsibility. His famous “Move fast and break things” became people’s lives – sometimes literally. And I doubt he’s seen his last appearance before Congress.

https://www.wired.com/story/facebook-click-gap-news-feed-changes/

How well can we predict the future of #coronavirus?

Did COVID-19 start in a lab or naturally progress from animals to humans?

While there’s no shortage of speculation on #COVID-19 – especially how it got started – the answer(s) have yet to become clear. It’s doubtful we will ever learn all the specifics that seem simple enough to determine and necessary to prevent the next iteration. COVID-19 and the next pandemic are subject to the butterfly effect and likely will remain a cloudy opinion.

The butterfly effect is casually referred to in pop culture but usually incorrectly. Edward Lorenz developed the concept while working through meticulous calculations for weather prediction. He observed that the smallest deviations in a system can lead to dramatically different results. Hence the image of a butterfly flapping its wings in Brazil and setting off a tornado in Texas. This sensitivity to initial conditions is why we can’t predict the weather well beyond about two weeks.

His work led to the founding of chaos theory – and chaos is not randomness. Chaotic systems are ordered but they vary – within limits. Predicting the next pandemic is like hurricane watching. We can learn the likely path and its variance but only know its exact movements as it unfolds.
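To see what “ordered but varying within limits” looks like, here is a minimal sketch in Python. It uses the logistic map, a standard textbook example of chaos, as a stand-in for a weather model; it is not Lorenz’s own system of equations, and the function name, starting values and step count are assumptions chosen only for illustration.

```python
def logistic_trajectory(x0, steps, r=4.0):
    """Iterate the fixed rule x -> r * x * (1 - x), starting from x0."""
    values = [x0]
    for _ in range(steps):
        values.append(r * values[-1] * (1.0 - values[-1]))
    return values

# Two starting points that differ by one part in ten billion
# (the "butterfly flap"). Both numbers are illustrative assumptions.
baseline = logistic_trajectory(0.2, 60)
nudged = logistic_trajectory(0.2 + 1e-10, 60)

# Distance between the two runs at every step.
gaps = [abs(a - b) for a, b in zip(baseline, nudged)]

for step in (0, 10, 20, 30, 40, 50, 60):
    print(f"step {step:2d}: gap between the two runs = {gaps[step]:.2e}")
```

The rule never changes and every value stays between 0 and 1, yet the two runs part ways within a few dozen steps. That is the hurricane-watching situation: bounded, ordered, and still unpredictable in detail.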

“You’re touching too much”

#coronavirus #techhacks

Touching is tangency. We didn’t realize how much we touched stuff until health and life demanded we pay attention. Realizing how much you touch stuff other people touched is really creepy.

There can be an app for that – touching too much. Your phone’s sensitivity can pick up how much you touch things – door handles, drinking & eating, your wallet, private/public transportation/buildings. It knows your meeting sked. It can remind you to wash hands or wipe down surfaces. At given intervals too, it politely says, “You need to wipe me down too; I’m getting kinda funky.”

There’s also already an app for one of the dominant touch activities – paying for stuff. Apple Pay and Google Pay (did you know you can add money as an attachment with Gmail??) already provide touchless options for transactions from your phone. Even before the outbreak, I cringed whenever I pulled out a credit card for payment. Either the cashier is touching it (and has touched a hundred before) or I’m touching a kiosk that innumerable people have touched before me. Contactless is exactly that – no touching! Although contactless is quite prevalent in Europe, many kiosks in the US show the symbol but it isn’t active. Having universal contactless transactions would hack a major TOUCH vulnerability.

Up next: Your health record: you can take it with you

Artificial Intelligence Rule #7: Close Enough

#closeenough

Rule #7 of #artificialintelligence: close enough.

Number 7 is pretty far down the list, but “close enough” is an equally important concept for AI. Have you ever queried Google or Bing and gotten a single entry? Aka “the answer” to your question? No. I know I’ve gotten a single page of items in return (I ask some weird questions) but it always provides a menu of options.

The page ranking algorithms of Google are legendary and as closely guarded as military secrets. They aren’t carved in stone. Indeed the algorithms are adjusted to counter specific hacks as well as to smooth out trends.