Part 3

Expanding Operational: Blockchain Deployments for Impact

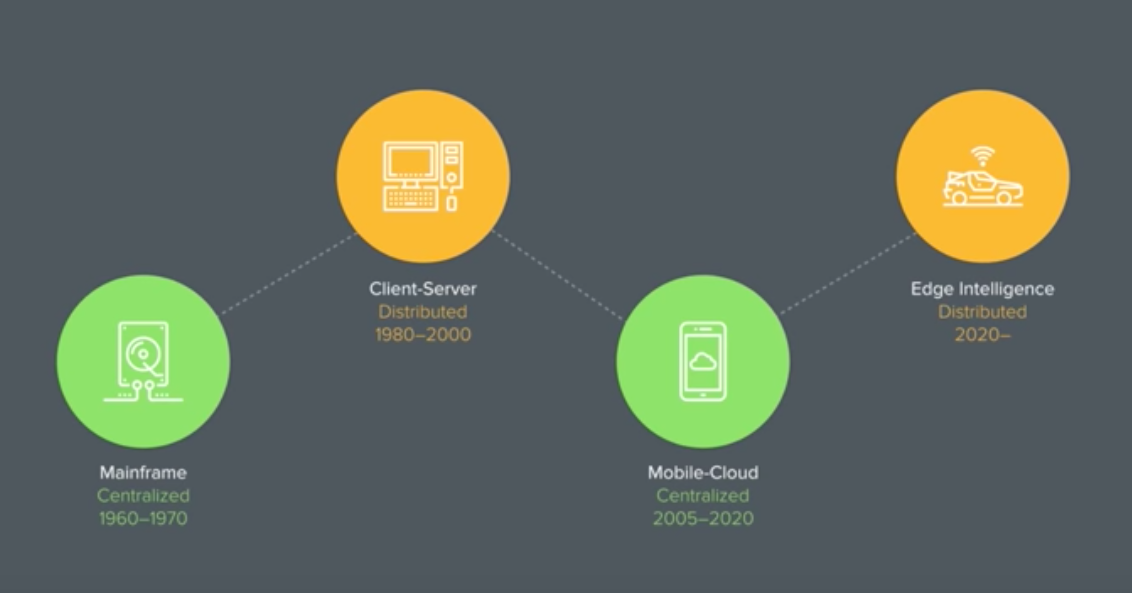

In Part 1, we explored the building blocks of blockchain – bitcoin and smart contracts. These top-level basics quickly make more complex operations possible. Applied step by step, blockchain is already making progress in today’s industries.

In Part 2, we began moving from tactical to operational. The tactical use of bitcoin and smart contracts for stand-alone functions in test and evaluation morphed into the next level, operational, where the isolated applications are pulled into a third dimension, kinda like the third semester of calculus.

In this Part 3, we move further into operational with more complexity and subsequently a greater demand for coordination of resources. Using these novel concepts also further intertwines cultural change both internal and external to the organization. Instead of modifying or enhancing current business practices, blockchain replaces the process entirely.

Scary? Because replacing a current practice requires extensive planning and considerable disruption to the business process, the effort must exact a significant return on investment. So, let’s start with a strong and somewhat clean candidate for substituting a process entirely.

NO Sugar Coating

Blockchain can eliminate travel claims. Travel claims are a huge administrative burden to any organization and the Navy is no exception. The present digitized paper process, although cumbersome, has been necessary because travel claims historically have been riddled with fraud. A significant system of checks and balances has been necessary not only to counter the financial risk but also to uphold the integrity of the faithful use of government funds.

The essence of blockchain is trust and the point of a travel claim has been verifying trust in a complicated (but not complex) process determining whether travel costs are true to the mission, in line with the command operations, and in adherence to multiple legal rules and guidelines.

By using smart contracts for mission validation and order generation, a blockchain solution ensures the individual travel arrangements are only ticketed if they follow the smart contract requirements. A traveler can’t make a first class airline reservation to the Caribbean unless the orders include that provision. The traveler can’t accidentally book a rental car in Bangor, Maine when he or she should be in Bangor, Washington. They can’t book a hotel that exceeds the maximum lodging rate, again unless the orders permit such exceptions. Although the user-unfriendly Defense Travel System (DTS) flags such transgressions, it does so in a cryptic procedure that still requires verification in both the creation and execution of the process, adding administrative burden as well as risk – to the traveler, the authorizing official and subsequently to the organization.
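To make the idea concrete, here is a minimal sketch in Python of orders acting as contract terms that a booking must satisfy before it can be ticketed. Everything in it is an assumption made for illustration: the `TravelOrders` and `Booking` types, their fields, and the `violations` function are hypothetical and are not part of DTS or any real smart contract platform.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class TravelOrders:
    """Hypothetical travel orders encoded as contract terms."""
    destination: str                 # e.g. "Bangor, WA"
    max_lodging_rate: float          # nightly ceiling in dollars
    allowed_fare_classes: frozenset = frozenset({"economy"})


@dataclass(frozen=True)
class Booking:
    """Hypothetical booking request for a flight, hotel, or rental car."""
    kind: str                        # "flight", "hotel", or "rental_car"
    location: str
    nightly_rate: float = 0.0
    fare_class: str = "economy"


def violations(orders: TravelOrders, booking: Booking) -> List[str]:
    """Return the reasons a booking breaks the orders; an empty list means it may be ticketed."""
    problems = []
    if booking.location != orders.destination:
        problems.append(f"location {booking.location!r} is not {orders.destination!r}")
    if booking.kind == "flight" and booking.fare_class not in orders.allowed_fare_classes:
        problems.append(f"fare class {booking.fare_class!r} is not authorized")
    if booking.kind == "hotel" and booking.nightly_rate > orders.max_lodging_rate:
        problems.append(
            f"rate {booking.nightly_rate:.2f} exceeds ceiling {orders.max_lodging_rate:.2f}"
        )
    return problems


orders = TravelOrders(destination="Bangor, WA", max_lodging_rate=150.0)
print(violations(orders, Booking("rental_car", "Bangor, ME")))             # wrong Bangor
print(violations(orders, Booking("hotel", "Bangor, WA", nightly_rate=120.0)))
```

In this sketch an exception, such as first class travel, is granted by writing it into the orders themselves (here, adding it to `allowed_fare_classes`) rather than by approving the booking after the fact.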

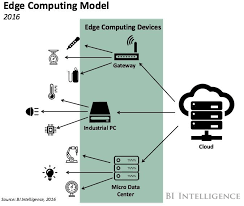

Blockchain ledgers reside in several distributed processing nodes that miners use. As such, a complete copy of the database exists on each node. This makes it highly difficult for anyone to misuse the technology for fraudulent purposes. A person will need to fool all the miners in the system to create a fraudulent entry.

https://gomedici.com/how-blockchain-will-revolutionize-invoice-backed-financing/

Furthermore, changing travel arrangements, even to save the overall cost of the mission, requires significant staffing of command personnel as well as a 24/7 help desk. Resolving those changes works well sometimes and not other times, making the process clumsy and flex-deterrent. Travelers avoid modifications because the process often doesn’t cooperate and changes cause ambiguities in cost accountability, shifting the risk to the traveler. It’s safer for the traveler, but more expensive for the government, to stick to the original itinerary.

Execution

With smart contracts, the travel payment and former claim process actually execute simultaneously in real time as travel occurs. There is no after-the-fact report, which is what today’s travel claim is. When the traveler boards a plane, the transaction is verified and paid. When the traveler checks in to the hotel, the night’s stay is paid, and the next, and the next until the traveler leaves. The metro ticket or Uber ride is verified – and paid – as it happens. Per diem clocks in at midnight every day. Per diem might morph into per minuta (prima/secunda) more relevantly. Each transaction is a block – communicated and verified as it happens.

Accountability

The immediate exchange is possible because accountability is pervasive and simultaneous. The command, the travel authority, and the financial auditing are all nodes on the distributed network. All receive identical copies that cannot be altered or corrupted as the traveler progresses. The smart contracts are created to only execute with valid transactions. By definition, all costs are incurred and audited in situ – as they happen. Travel claims are not necessary because the transaction cannot happen without valid quid pro quo. Get it?
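A small Python sketch can show why an altered copy stands out. It chains each travel transaction to the one before it with a SHA-256 hash, using only the standard library. The function names, the record fields, and the dollar amounts are all made up for illustration, and the sketch leaves out what a real blockchain adds on top, such as signatures and consensus among nodes.

```python
import copy
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(prev_hash: str, payload: Dict) -> str:
    """Hash the payload together with the previous block's hash."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_block(chain: List[Dict], payload: Dict) -> None:
    """Add a transaction to the end of the ledger, linked to the prior block."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append(
        {"prev": prev_hash, "payload": payload, "hash": block_hash(prev_hash, payload)}
    )


def is_intact(chain: List[Dict]) -> bool:
    """Recompute every hash; any edited payload or broken link fails the check."""
    prev_hash = GENESIS
    for block in chain:
        if block["prev"] != prev_hash:
            return False
        if block["hash"] != block_hash(prev_hash, block["payload"]):
            return False
        prev_hash = block["hash"]
    return True


ledger: List[Dict] = []
append_block(ledger, {"item": "flight", "amount": 412.50})
append_block(ledger, {"item": "hotel night 1", "amount": 139.00})

tampered = copy.deepcopy(ledger)
tampered[0]["payload"]["amount"] = 4125.00  # someone edits their own copy

print(is_intact(ledger), is_intact(tampered))
```

Someone who controls a copy could recompute every hash after editing it, so the chain alone is not the whole protection. The rest comes from the other parties holding their own copies: the final hash of the edited ledger would no longer match theirs.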

Smart contracts also provide detailed record keeping on a Big Data level. Because the transactions are distributed to several parties, each one can monitor for and flag transactions that are out of context. This is more efficient than verifying each travel claim: individual anomalies are detected and resolved more readily, and the anomaly data provides feedback to the system as a whole.

http://dataconomy.com/2018/01/blockchain-will-kill-invoice/

Pay Off

The Defense Travel System (DTS) is basically a digitized paper process, enhanced with the ability to flag certain items and complete select transactions such as airline tickets, hotel reservations and rental cars (most of the time). A blockchain smart contract is a true digital process inherently built with trust to facilitate transactions without undue verification. Smart contracts would understand cost trade-offs without manning redundant staffs.

APPLICATION 5: substitute the travel claim process with travel order smart contracts

Replacing a digitized paper process with a digital system is a foundation for operational blockchain applications. So let’s pick another example.

Pass the Test

Physical fitness is and always will be a personal measurement. No one can be your fitness for you; it’s a bank account only you create through deposits and withdrawals. However, it no longer needs to be a command function. Like most standardized tests, the Navy’s Physical Fitness Assessment (PFA) doesn’t measure fitness; it measures the ability to take the test. Blockchain can eliminate the administrative burden of physical fitness assessments currently required of each command by replacing them with continuous monitoring and smart contracts.

To understand the solution, let’s first look into the natural stasis of physical fitness testing within the Navy lens. Personal physical fitness – and the test thereof – falls into three categories.

Branches

The first group – hopefully the largest within the Navy – already routinely exercises without monitoring or testing, often far exceeding any written instructions. Whether they hit the gym three times a week or hit the trail every day or train for triathlons or all of the above, they just do it. Working out doesn’t have to make sense or be convenient, these folks know it feels good and it is good. They don’t need an instruction or direction, let alone a minimum test.

The second group does not have any workout regimen, yet they appear twice a year to pass the current fitness testing at whatever competency level. This “3 mile club” demonstrates that testing does not measure fitness so much as underline the administrative burden it takes to execute the command physical fitness assessment. They naturally pass the minimum standard and do not need training or workouts. They do not need further monitoring or assistance unless they begin missing the mark.

The third group does not meet the minimum requirements. However much or little they work out, these folks could go in either direction. Not everyone has the natural ability to pass like the second group, but the patterns of the first group’s regimen can be learned. Instead of spending time testing everyone, attention can go to supporting the individuals who need help, potentially by learning from those exceeding the bar.

The Minimum

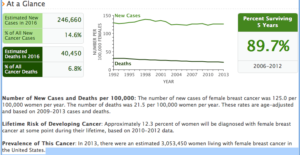

One of the challenges to having standards testing is the minimum requirement itself. The bar is set ostensibly to ensure that during the perceived arduous duty, Navy personnel have the physical capability to thrive in combat. Historically, the need for physical capability has fluctuated greatly. Even within the lens of today’s standards, the Navy is bounded by the overall physical fitness of the recruiting population, which is famously becoming less fit and more overweight.

Within the Navy, too, the physical demands of a job vary from community to community. The pilot flying high-performance aircraft requires greater physical capability than the human resources officer ensuring the mission continues on the ground. The combat corpsman needs to be in better shape than the submariner.

The Rest of the Story

At the end of the day, the bar is set not so much to ensure physical fitness as to meet the variety of goals required for the Navy’s overall mission. End strength – the overall numbers in uniform – and Fit & Fill – the right skill sets sitting in the appropriate job – are highly challenging tasks even without any friction. PFA testing has often been used for force shaping – the tool to manage end strength and fit & fill. Thus the bar rises during times of economic downturn and reduced budgets in order to pare down numbers. The bar settles downward to retain Sailors in less austere times.

The Navy will grant a clean slate to nearly 50,000 sailors with fitness failures in their records, part of new shakeup for fleet-wide fitness rules announced Thursday.

https://www.navytimes.com/news/your-navy/2017/12/21/navy-grants-fitness-amnesty-to-48000-sailors-who-failed-test/

So what replaces “testing”?

Blockchain validates a transaction; for the PFA, a smart contract is fulfilled through individual accomplishment. That data aggregates into a Navy-wide physical fitness measurement. Whereas a standardized test measures the ability to pass a test at a given level, flipping that idea means recording actual fitness participation and determining fitness from the data. The smart contract fulfills the testing requirement, but the Big Data capture is actually the value that is important to understand. One more time – knowing how fit the Sailors are is far more valuable than passing any test. Time and policy have proven the test is variable. If followed effectively, this methodology actually relieves the need for a test.

Pic MCS Christopher Pratt/Navy

What Does it Look Like?

Implementation would start with a morph. The first group is the model. Their individual workouts fulfill the requirements of physical fitness for the organization day after day. For this group, the smart contract obligations are integrally and continuously verified. For the second group, the 3 mile club makes a trip to the gym for specific measurements at a periodicity to fulfill the obligation, like an inoculation that has to be fulfilled. Finally, the third group gets flagged immediately, which provides the quality attention for establishing the routines of the first group.
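The three-way routing described above could be sketched roughly as follows in Python. The `Track` names, the `assign_track` function, and especially the cutoff of three logged workouts per week are assumptions chosen only for illustration. The source does not define thresholds or say where a Sailor's fitness data would come from.

```python
from enum import Enum


class Track(Enum):
    """Hypothetical ways a Sailor's fitness obligation gets verified."""
    CONTINUOUS = "verified continuously from logged workouts"
    PERIODIC = "verified by periodic measurements at the gym"
    SUPPORT = "flagged immediately for coaching and support"


def assign_track(
    workouts_per_week: float,
    meets_minimum: bool,
    regular_threshold: float = 3.0,  # assumed cutoff, not an official standard
) -> Track:
    """Route a Sailor to one of the three groups described in the text."""
    if not meets_minimum:
        return Track.SUPPORT         # third group: gets the attention first
    if workouts_per_week >= regular_threshold:
        return Track.CONTINUOUS      # first group: workouts fulfill the contract
    return Track.PERIODIC            # second group: the "3 mile club"


for name, workouts, ok in [("A", 5, True), ("B", 0, True), ("C", 1, False)]:
    print(name, assign_track(workouts, ok).value)
```

The check for missing the minimum comes first on purpose, so that someone who logs plenty of workouts but still falls short is routed to support rather than counted as verified.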

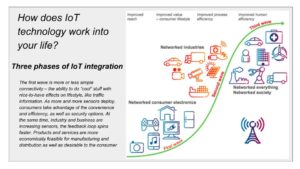

Eventually, the fitness assessment would be seamless, ubiquitous, and transparent. Just as your phone knows where you are, the Navy would know fitness as a whole and as individuals. The notion of twice-a-year testing is bound by the discrete, paper limitations of analog thinking. Today’s Sailors are not draftees. The all-volunteer force is increasingly made up of millennials, born into a connected, continuous world. Making a digital process – not digitizing the current one – is what serves them as well as the Navy.

APPLICATION 6: substitute the Command Physical Fitness Assessment test with personal continuous fitness smart contracts

Next up: Part 4,

![By Piergiuliano Chesi (Own work from scan) [Public domain], via Wikimedia Commons](https://colettegrail.com/wp-content/uploads/2018/06/1024px-Trans_World_Airlines_ticket_1973-08-03.jpg)